Citrix Machine Creation Services (MCS), as of XenApp/XenDesktop 7.9, provides the ability to write to memory with overflow to disk, just like the RAM cache with overflow to HDD option available in PVS. This greatly decreases write I/O, so you don’t have to rely as much on the underlying storage or worry about hitting a storage bottleneck as you scale the desktop environment.

Previously with MCS, temporary writes went to the delta/differencing disk attached to the VM. You now have the option of using temporary memory (RAM) to cache writes, with a temporary disk that takes over if the RAM cache becomes full. When writes overflow to disk, keep in mind that you don’t want that disk to become a bottleneck, so size the environment properly and place temporary disks on storage that won’t significantly degrade performance. The more RAM you assign to the memory cache, the less chance writes will ever reach disk. You could reduce IOPS by as much as 95%, depending on how much RAM you can assign to the cache!
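The IOPS saving is simple proportion: whatever share of writes the RAM cache absorbs never reaches disk. The minimal Python sketch below shows that arithmetic. The absorbed fraction is an assumption you would have to estimate for your own workload, and the function name and the 200 IOPS figure are hypothetical.

```python
def disk_write_iops(total_write_iops: float, absorbed_fraction: float) -> float:
    """Estimate the write IOPS that still reach disk.

    absorbed_fraction is an assumed share of writes served entirely from the
    RAM cache: 0.0 means no RAM cache, 1.0 means no writes ever reach disk.
    """
    if not 0.0 <= absorbed_fraction <= 1.0:
        raise ValueError("absorbed_fraction must be between 0 and 1")
    return total_write_iops * (1.0 - absorbed_fraction)


# Hypothetical VM producing 200 write IOPS, with 95% absorbed by RAM.
print(f"{disk_write_iops(200, 0.95):.1f} write IOPS reach disk")
```

In this example only around a twentieth of the original write load is left for the storage to handle, which is why the size of the RAM cache matters so much.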

Temporary memory of 256MB for VDI (desktop) machines and 2GB for Session Host (server) machines is recommended, which falls in line with the recommendations for PVS RAM cache. If your VDI machines are 32-bit, 256MB is probably optimal. This is because a 32-bit OS is limited to a maximum of 4GB of RAM, with 2GB of address space assigned to kernel mode for things like device driver code and kernel structures, which live in either the paged or the non-paged memory pool. When you configure the MCSIO RAM cache on these VMs, its memory is shared with the non-paged pool. Given that the MCSIO RAM cache can grow up to 50% larger than what you allocate, you need to ensure the non-paged pool will always have enough memory, or you risk the machine crashing.
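To illustrate the headroom check this implies, here is a minimal Python sketch. It only encodes the 50% growth figure from the paragraph above. The non-paged pool budget and the other non-paged consumers are hypothetical inputs you would measure on your own image, not Citrix or Microsoft limits.

```python
GROWTH_FACTOR = 1.5  # MCSIO RAM cache can grow up to 50% beyond its configured size


def worst_case_cache_mb(configured_mb: float) -> float:
    """Largest amount of non-paged pool the RAM cache might occupy."""
    return configured_mb * GROWTH_FACTOR


def has_nonpaged_headroom(configured_mb: float,
                          nonpaged_budget_mb: float,
                          other_nonpaged_mb: float) -> bool:
    """Return True if the worst-case cache still fits alongside other usage.

    nonpaged_budget_mb and other_nonpaged_mb are hypothetical inputs that you
    would measure on your own 32-bit image; they are not vendor figures.
    """
    return worst_case_cache_mb(configured_mb) + other_nonpaged_mb <= nonpaged_budget_mb


# Hypothetical 32-bit image: 1024MB non-paged budget, 300MB used by drivers.
print(has_nonpaged_headroom(256, 1024, 300))   # 384 + 300 fits in 1024
print(has_nonpaged_headroom(1024, 1024, 300))  # 1536 + 300 does not
```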

The recommended temporary disk size is the free disk space of the VM plus the page file size. I normally set a 20GB cache size and get away with it, but it all depends on your own environment.
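The sizing guideline is plain addition, so a short Python sketch can compare it with a flat size such as the 20GB mentioned above. The function names and the VM figures (30GB free, 8GB page file) are hypothetical examples.

```python
def recommended_disk_cache_gb(free_disk_gb: float, pagefile_gb: float) -> float:
    """Temporary disk size guideline: VM free disk space plus page file size."""
    if free_disk_gb < 0 or pagefile_gb < 0:
        raise ValueError("sizes must be non-negative")
    return free_disk_gb + pagefile_gb


def describe_choice(chosen_gb: float, free_disk_gb: float, pagefile_gb: float) -> str:
    """Compare a chosen cache size (e.g. a flat 20GB) against the guideline."""
    target = recommended_disk_cache_gb(free_disk_gb, pagefile_gb)
    if chosen_gb >= target:
        return f"{chosen_gb}GB meets the {target}GB guideline"
    return f"{chosen_gb}GB is {target - chosen_gb}GB below the {target}GB guideline"


# Hypothetical VM with 30GB free and an 8GB page file.
print(describe_choice(20, 30, 8))
```

Falling below the guideline is not necessarily a problem; it simply tells you how much headroom you are trading away, which you can then check against real cache usage.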

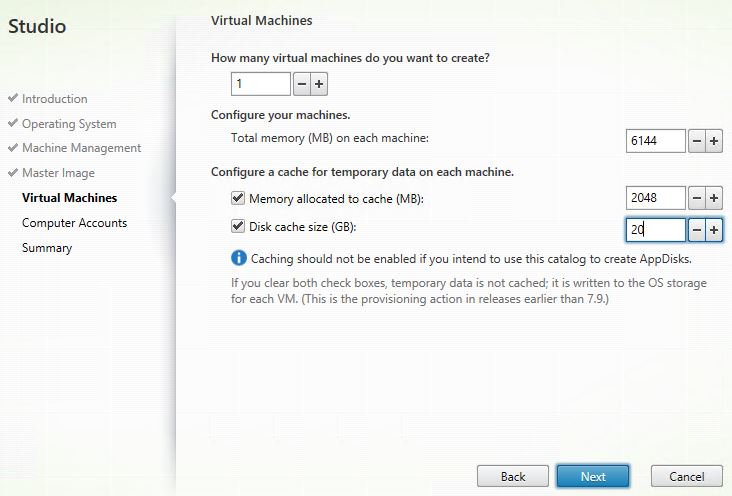

With your Citrix Studio console open on a XenApp or XenDesktop 7.9 farm, create a new Machine Catalog provisioned by MCS. Existing standard MCS catalogs cannot be converted to use the cache, because an MCSIO-specific driver is installed on the VMs while they are being provisioned. You have the following options in the MCS Machine Catalog creation wizard:

Memory allocated to cache (MB) – Provide a value in MB for how much RAM you want to assign to the cache. You can use the memory cache on its own, but be aware that if it runs out, your VMs will become unstable and will likely freeze or blue screen. For this reason it is recommended to deploy a temporary disk cache as a backup.

Disk cache size (GB) – Provide a value in GB for how much disk space you want to dedicate to the disk cache. You can choose to create only a disk cache, in which case VMs will perform as they always have, producing the same IOPS as when provisioned without any cache. The benefit of a disk cache is that you can separate writes onto different storage, away from the base image and differencing disk. It is always recommended to create a disk cache when using RAM cache, in case the RAM cache runs out of free space.

Note that you also have the option not to configure any cache, in which case MCS machines will be created and operate as they always have.
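The four combinations the wizard allows can be summarised as a small decision function. This is a hypothetical Python helper written purely to restate the behaviour described above; it is not part of Studio or any Citrix SDK.

```python
def describe_cache_mode(memory_mb: int, disk_gb: int) -> str:
    """Summarise the behaviour of each cache combination in the MCS wizard."""
    if memory_mb < 0 or disk_gb < 0:
        raise ValueError("cache sizes must be non-negative")
    if memory_mb and disk_gb:
        return "RAM cache with overflow to the temporary disk"
    if memory_mb:
        return "RAM cache only: VMs may freeze or blue screen if it fills"
    if disk_gb:
        return "disk cache only: same IOPS as no cache, but on separate storage"
    return "no cache: MCS machines behave as they always have"


for memory_mb, disk_gb in [(256, 20), (256, 0), (0, 20), (0, 0)]:
    print(memory_mb, disk_gb, "->", describe_cache_mode(memory_mb, disk_gb))
```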

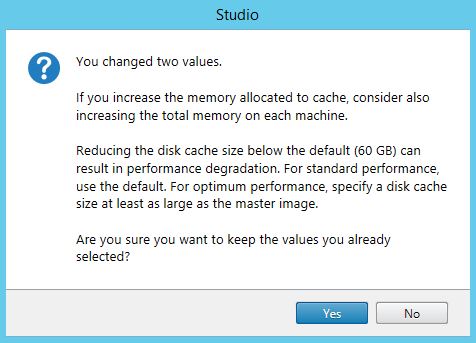

Click Yes. Complete the MCS Machine Catalog creation wizard. You will now have machines optimized for writes.

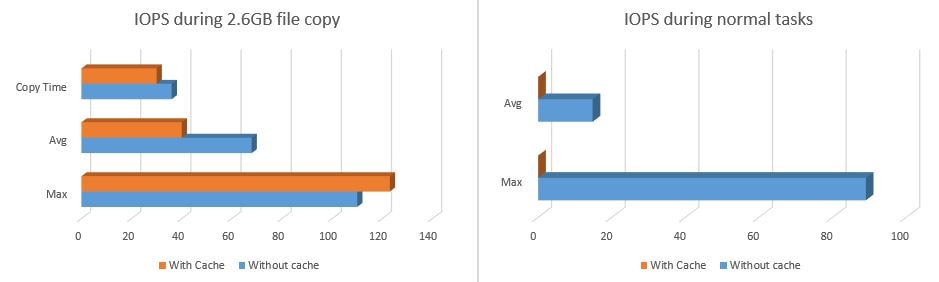

Using a simple file copy operation, you can see the difference when a 2.6GB file is copied to an MCS machine using cache (1GB) vs. one without cache, with write activity peaking at 110 IOPS on a 15K spindle.

What about general daily tasks such as internet browsing and file browsing? The results below compare normal operations on an MCS-provisioned machine using cache vs. one without. There is no direct hit on IOPS when the cache is in use!

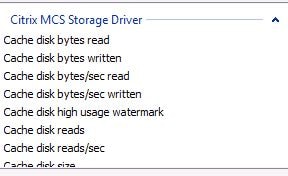

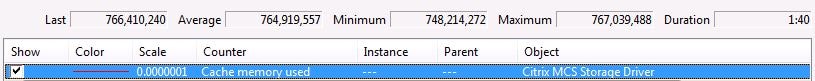

Using Performance Monitor on the Windows VDA, you have a number of counters you can use to track performance and usage of the MCS cache. One particularly useful counter is Cache memory used, which shows how much of the cache memory is in use. A simple test of copying a 710MB file to the VDA increased the Cache memory used counter, which accurately reported what was now held in cache. Also note that 0 write IOPS were produced during the file copy.
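If you export the counter samples (for example, from a Performance Monitor log) and convert them to MB, a few lines of Python can summarise peak usage against the configured cache size. The export step, the MB units, the function name, and the sample values below are all assumptions for illustration.

```python
from typing import Sequence


def cache_utilisation(samples_mb: Sequence[float], configured_mb: float) -> dict:
    """Summarise Cache memory used samples against the configured cache size."""
    if not samples_mb:
        raise ValueError("need at least one sample")
    peak = max(samples_mb)
    return {
        "peak_mb": peak,
        "peak_pct": 100.0 * peak / configured_mb,
        # Above 100% is possible because the cache can grow beyond its setting.
        "over_configured": peak > configured_mb,
    }


# Hypothetical samples (MB) taken while copying a 710MB file to a 1024MB cache.
print(cache_utilisation([0, 240, 480, 712], 1024))
```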

This is a great improvement for MCS provisioned machines and now puts MCS right up there beside PVS when it comes to IOPS performance.

Additional points:

- MCSIO works on non-persistent Desktop/Server and Session Host machines. Citrix Personal vDisks do not work with MCSIO, which rules out persistent desktops.

- The more RAM you assign to a cache, the fewer IOPS your storage will ever have to deal with.

- You cannot migrate existing MCS provisioned catalogs to MCSIO; you must recreate them.

- If the temporary memory runs out, the temporary disk storage is used. The most recently used blocks of data are kept in memory for performance and the least used are flushed to disk, much like PVS RAM caching with overflow to HDD.

- MCSIO can limit the amount of memory it uses if the VM becomes overloaded. Non-paged pool memory consumption on the machine is monitored, and if it reaches 70% of available memory, MCSIO suppresses its memory usage and writes to disk instead until non-paged pool consumption drops.

- Disk cache and memory cache size cannot be changed once the Machine Catalog has been created.
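To make the overflow and throttling behaviour above more concrete, here is a toy Python model. It is a sketch under stated assumptions (fixed-size blocks, least recently used eviction, and a simple on/off switch at 70% non-paged pool usage) and should not be read as the MCSIO driver’s actual algorithm. The class and method names are hypothetical.

```python
from collections import OrderedDict


class WriteCacheModel:
    """Toy model of a RAM write cache that overflows to a temporary disk.

    Assumptions, not Citrix's implementation: fixed-size blocks, least recently
    used eviction when RAM is full, and new writes sent straight to disk while
    non-paged pool usage is at or above 70%.
    """

    THROTTLE_THRESHOLD = 0.70

    def __init__(self, ram_blocks: int):
        self.ram_blocks = ram_blocks
        self.ram = OrderedDict()  # block id -> data, least to most recently used
        self.disk = {}            # overflow disk contents
        self.disk_writes = 0      # number of writes that reached the disk

    def _write_disk(self, block, data):
        self.disk[block] = data
        self.disk_writes += 1

    def write(self, block, data, nonpaged_usage: float = 0.0):
        if nonpaged_usage >= self.THROTTLE_THRESHOLD:
            # Memory pressure: drop any RAM copy and write directly to disk.
            self.ram.pop(block, None)
            self._write_disk(block, data)
            return
        self.ram[block] = data
        self.ram.move_to_end(block)  # mark as most recently used
        while len(self.ram) > self.ram_blocks:
            # Flush the least recently used block to the overflow disk.
            old_block, old_data = self.ram.popitem(last=False)
            self._write_disk(old_block, old_data)

    def read(self, block):
        if block in self.ram:
            self.ram.move_to_end(block)  # a read also counts as a use
            return self.ram[block]
        return self.disk.get(block)


cache = WriteCacheModel(ram_blocks=2)
cache.write(1, "a")
cache.write(2, "b")
cache.read(1)                             # block 1 is now most recently used
cache.write(3, "c")                       # RAM full: block 2 is flushed to disk
cache.write(4, "d", nonpaged_usage=0.75)  # above 70%: goes straight to disk
print(sorted(cache.ram), sorted(cache.disk), cache.disk_writes)
```

Reads check RAM first, so a block that was flushed or written under pressure is still served correctly from the overflow disk.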

Michael McAlpine

October 26, 2016

Do you have any rules for RAM/disk allocation to the MCS temporary data cache? I am considering flipping this on when we migrate from Server 2008 R2 to Server 2016, and the disk space = cache space recommendation from Citrix seemed completely arbitrary.

George Spiers

October 26, 2016

I would absolutely consider using RAM/disk cache with MCS when you migrate. I normally assign around 2GB RAM to Server VDAs. The more RAM you can safely assign to machines, the less chance IOPS will ever have to fall back onto disk. That being said, you don’t necessarily want to oversubscribe machines with RAM for the sake of it, so you should consider some testing with users on a box and make use of the MCS performance counters to track cache usage. Things you need to factor in are how many users will be connected to one Server VDA, what applications will be used, whether the applications cache much data, how often servers are set to restart, etc.

Michael McAlpine

October 27, 2016

When we built our 7.x farm we basically guessed at resources, and we guessed way too high, so I have quite a bit of free memory on my hosts. Our 2008 R2 machines currently support 19-20 users with 4 vCPUs and 16GB of memory. I’m really not sure how I am going to build the Server 2016 RDSH machines because I am not aware of any recommendations for builds. I don’t see more than 650 concurrent connections, so I am planning on trying to decrease the number of RDSH servers I have from 30 to 20 by beefing up my 2016 VMs. I expect to see 30-35 users per machine.

Have you seen any guides or do you have one in the works for Server 2016 and XenApp desktop/application hosts?

George Spiers

October 27, 2016

I haven’t got round to working with Server 2016 yet but cannot imagine deployment best practices will be too different from 2012 R2. There’s a whole blog post on optimising 2012 R2 at https://www.citrix.com/blogs/2014/02/06/windows-8-and-server-2012-optimization-guide/. Any recommended optimisation should be evaluated individually to determine if implementing will have a positive outcome. Server 2016 is supported right now with XenApp 7.11.

Michael McAlpine

October 28, 2016

Thanks, I’ve done testing and I have a catalog with the Server 2016 VDA. Excluding OS optimizations, user validation, and KMS licensing, Server 2016 is working well in my 7.11 environment… so kudos to Citrix for day one support. I expect to roll over to Server 2016 in Jan/Feb depending on user testing and acceptance.

George Spiers

October 28, 2016

Sounds good! Good luck 😉

Cenk

January 19, 2017

Hi, have you had to change the swap file within the OS at all? I am assigning 16GB RAM, 4GB cache and 100GB (size of VM) to disk cache. Any suggestions would be appreciated. OS is Server 2012 R2, published apps on XA 7.12.

George Spiers

January 19, 2017

I remove system-managed pagefiles and configure them manually with a min/max size, with the max size equal to the amount of RAM on the VM. If you don’t care so much about performing full memory dumps, you could decrease the size of the page file. I’d recommend not allowing the pagefile to be system managed, though.

Oykleppe

February 15, 2017

Hi

On a setup with only local SSD storage, will the Memory cache make any noticeable impact for the users?

If I used shared storage or spinning disks, maybe. But with only local SSD, it wouldn’t make much difference anyway?

George Spiers

February 15, 2017

Local SSD storage is great. I wouldn’t use RAM cache unless the virtual machines were doing major IOPS-heavy jobs or I wanted more density. If you have workers who run heavy IOPS-intensive workloads, or you are looking to maximise the VDA density per SSD, then RAM cache can certainly help. It all depends on your requirements.

Pavan

July 17, 2017

Hello George,

Do we need to add an additional hard drive to the master image for the machine-creation-services-storage-ram-disk-cache catalog? Or will the process create one itself? Thanks, Pavan

George Spiers

July 17, 2017

Hi Pavan, the Gold VM does not have to have an additional Hard Drive. When you run through creating a Machine Catalog using MCS, there is a tick-box option to include an HDD in the build which will act as your overflow disk.

Matheen

September 28, 2017

Hi George,

Thanks for the article. If I use an MCS full clone dedicated desktop, would I be able to use MCS IO? I have dedicated VDIs which are running slow. My intention is to create a master image, convert them to full clone dedicated VDIs and use MCS IO. Not sure if this can be done.

George Spiers

September 28, 2017

Yes certainly, it can be done on XenDesktop 7.9 and above releases.

Richard Hughes-Chen

October 12, 2017

Does MCS support a second disk as part of the master image? There is an application which requires a drive other than C: to be available. I’m not talking about a cache disk.

George Spiers

October 12, 2017

Nope – you would have to create those disks manually yourself once the VMs have been created.

Shaun

November 25, 2017

I’ve enabled MCS RAM cache. What does total memory mean when creating an MCS catalog? How much memory would each of your VDAs report having in vSphere? I’ve set a total of 14GB and 4GB of RAM cache, but vSphere reports only 10GB of RAM. Is this correct?

George Spiers

November 25, 2017

No, I have not heard of that before. If you specify total RAM as 14GB, the VM should receive 14GB.

Joe

April 17, 2018

I’m trying to build a new catalog with MCSIO, but I only have the option to create the RAM cache. The disk cache check box is grayed out for me. What am I missing?

Running 7.15 fyi

George Spiers

April 17, 2018

What Hypervisor and what Gold Image OS type are you using? Is this the first catalog you have tried to create?

Anonymous

November 12, 2018

I have the same problem. I use “ESXi 6.5 Update 1” and “Windows 7”.

Rahul

May 21, 2018

Hi George,

I need to create a dedicated MCS catalog. If I choose both the RAM cache and disk cache options, the differencing and identity disks will also be created automatically, right?

So I have 2 questions here:

How will customizations/changes be saved to the differencing disk if all writes go to the RAM/disk cache?

After a restart, the RAM and disk cache will be cleared, right?

George Spiers

May 21, 2018

Correct, and I’m not sure what you mean by customisations. Writes are written to RAM first unless there is no free RAM left, then they go to the overflow disk. Both disk and RAM are cleared down during reboot, yes.

Rahul

May 22, 2018

Are the RAM cache and disk cache options available for dedicated MCS desktops? I think they’re only for pooled static and pooled random.

George Spiers

May 22, 2018

That is correct, it works for pooled virtual machines and static virtual machines which are non-persistent.

Rahul

May 22, 2018

Thanks George. Can you provide some insight into MCS full clone dedicated desktops, such as the storage considerations and best practices?

George Spiers

May 22, 2018

I suggest you read this: https://support.citrix.com/article/CTX218082

Thomas Lund

August 24, 2018

I do not have any local storage on my hosts, only SAN. I am going to add local storage, but I would like to know if anyone has experience regarding HDD vs. SSD. Will SSD add noticeable performance, or will 15K HDD do the job?

Deepak Sanadi

December 14, 2018

Very good article, sir.

Excellent as always. Keep up the good work.

Uwe

December 20, 2018

First of all: Great article!

How much memory would you allocate for Win10 1809 cache? Trying to size my new Nutanix Cluster… Is in-memory cache still relevant with All-Flash Hosts?

George Spiers

December 21, 2018

Good question! I’d normally go for 512-1024MB depending on workload (medium/heavy) for Windows 10. Whilst RAM will generally be quicker than disk, you might not notice a whole lot of difference if you have plenty of flash storage to handle the number of desktops you deploy. You also might be more pressed for RAM and see the benefit of giving it to the user instead of the write cache. In my latest deployment I am toying with the idea of just going all disk on Nutanix hosts also, and using the extra RAM for the desktops.

Uwe

December 21, 2018

That’s what I thought.

My budget allows for 6GB per VM. Maybe I’ll test what’s best for my users with and without RAM cache. After all, it’s just a matter of recreating the catalog.

Andy

December 9, 2019

Sorry for replying to an old post.

I am considering enabling the RAM and disk cache on my Nutanix cluster as well.

When I read the article on the Citrix website, it said that the feature is not available when using a Nutanix host connection. Is there any known issue with this combination?

reference: https://docs.citrix.com/en-us/xenapp-and-xendesktop/7-15-ltsr/install-configure/machine-catalogs-create.html

the part “Configure cache for temporary data”

George Spiers

December 14, 2019

Yeah, Nutanix say you don’t need it; they don’t expose it in the plugin.

Stephen

January 8, 2019

Hi George,

I am running an ESX cluster with XenApp 7.14 and using MCS to deliver 12 XenApp servers. The ESX hosts don’t have local disk, so all storage is SAS-based SAN. As I am leveraging the same storage as the VMs, is there any benefit in turning on memory and disk cache? It seems like I could cause unnecessary disk I/O. I have a 2GB memory cache and a 20GB disk cache. My concern is that prior to implementing MCS I was getting better user density per VM. Have you got any suggestions which may help? Thanks Stephen

George Spiers

January 8, 2019

Good question – 7.15 LTSR CU3 has an updated MCSIO driver which fixed a few performance issues that are present in the MCSIO driver that you will be using.

Writing to RAM will be quicker than SAS disks, so that would be beneficial especially under high load such as during morning logon storms.

That way you may get more desktops out of your existing storage if required. At the same time however if you have a lot of storage and IOPS capacity then you might not notice a difference in user experience, and you can reclaim and use that 2GB for more desktops or for your existing desktops.

Stephen

February 8, 2019

So you are saying it is still important to keep the memory and disk cache? Would it be wise to increase the memory of the machines and memory cache to help get more user density?

George Spiers

February 9, 2019

I would certainly recommend RAM cache because it is going to be faster than disk. That said, if you are a little RAM constrained, you might decide to cache to disk instead and use the RAM you have for dedicated machine/user operations, or to build more VMs. To answer your question around memory increase, I assume you are using Server OS/SBC. More RAM will help, but you need to factor in CPU too so that you have no bottleneck there. If you have worked out your NUMA ratios and have plenty of RAM left over, then do use it to your advantage. Monitor your CPU/RAM consumption and you’ll figure out what you need.

Stephen Arnott

February 9, 2019

George, do you have any more details on what sort of performance issues we could see with the MCSIO driver in versions older than 7.15 LTSR CU3? If running older versions of XenApp, should I consider turning off disk and RAM cache?

George Spiers

February 11, 2019

Machines could freeze during peak hours. New users cannot log on and existing users may receive errors regarding lack of memory when trying to run programs. Note this isn’t fixed in any release yet. Also note I’ve used MCSIO for hundreds of VDAs and not run into these issues.

Stephen Arnott

February 11, 2019

Thanks George,

Off topic, have you seen any issues with Excel consuming all CPU, and any remediations for it?

Cheers,

Stephen

George Spiers

February 12, 2019

I haven’t seen that. Are you running Excel 2016? What OS? I’m assuming the Excel files are not large in size and all it takes is to open a blank workbook for the CPU to be fully consumed.

Stephen Arnott

February 12, 2019

Hi George,

Actually, Windows Server 2012 R2 and Office 2013. Running/loading Excel is no problem, but when doing any sort of calculation or data query, Excel struggles and hits 100% CPU.

Cheers,

Stephen

George Spiers

February 12, 2019

Plenty of forum discussions about similar issues. All I would suggest is figuring out when the problem was first reported, and tracking what had changed on the image at that time e.g. Windows updates. You could try replicating the same issue on a different image/build a test image and gradually load components on it whilst repeatedly testing Excel.

AC

February 8, 2019

Hi, my disk cache option is grayed out but not the RAM cache. Any reason why this may be? This is for a non-persistent random pool. Thanks.

George Spiers

February 9, 2019

On-premises I assume? Are you using shared storage or storage local to the hypervisor host? What OS is the Gold Image running?

LUIS

April 11, 2019

Configure your connection and select storage for the temporary cache, then tune it over one week, checking bandwidth, latency and IOPS.

Nick Casagrande

May 3, 2019

Great article, I have this enabled on my catalog. How can I be sure it is working correctly? Is there a specific perf counter I can view while performing a file copy test or something?

George Spiers

May 3, 2019

Thanks. Yes, there are MCS IO Windows Performance Monitor counters you can use to track cache usage.

Andrey

November 20, 2019

Hi George,

The new machine in the MCS+IO catalog has a temporary thin disk. But over time, it grows and becomes thick. After rebooting the machine, its size is retained and it keeps taking up space on the datastore.

Do you know why this happens?

I think it would be better if the temporary disk returned to its original state, just like the Diff disk in MCS (without IO).

George Spiers

December 3, 2019

This is normal. You won’t see it shrink at the hypervisor level; however, the disk contents should be somewhat cleared between reboots.

Kevin phillips

February 13, 2020

We have just upgraded from 7.15 to 7.1912 LTSR. I don’t want to enable the MCS IO optimisations yet, because that is in the next stage of testing. However, I am finding that after a few hours of users logging in and logging off, the IOPS are increasing dramatically. Has anybody else noticed this when they upgraded the VDA? If I revert the VDA back to 7.15 LTSR CU3, then it works as expected.

Anonymous

February 13, 2020

I’d tried it around 7.17, I believe, and it was a mess. It didn’t work at all and made things worse after it became a file system driver; before that it was block level, I believe. Others told me the same, it’s a dumpster fire.

Anonymous

March 24, 2020

I’m also upgrading from 7.15 to 7.1912 LTSR. I noticed that if I reboot the server workload (not from Studio, but from inside the guest OS itself), it keeps all the changes instead of discarding them.

With 7.15 LTSR CU4, instead, I could restart the guest OS both from Studio and from the guest OS itself, and it cleared all the changes…

I would like to keep this behaviour… is it possible? Thank you

Anonymous

April 15, 2020

We have noticed that ever since our 1903 upgrade, the WriteCache disk is NAMED (“WCDisk” I think, if you look in Disk Management), and it is EXPOSED to the User in their VDI session (Drive “E” in most cases). I don’t recall the WriteCache disk being exposed prior to 1903, and I think it was Unformatted, too; is this correct? If so, what changed so that this disk is exposed? And we’re trying a GPP, “Drive Maps”, to hide “E” from Users; is that the best way to do this?

George Spiers

September 7, 2020

Correct, since 1903 the write cache container is now file based, like Citrix Provisioning. A write cache disk is created and formatted automatically when you first boot your VDA and the disk is named as you noticed, MCSWCDisk.

To hide a specific drive letter, see: https://support.microsoft.com/en-gb/help/231289/using-group-policy-objects-to-hide-specified-drives

Anonymous

April 15, 2020

It changed to a file system driver just like PVS. You can hide it with WEM if you really need to.

Anonymous

April 15, 2020

Where can I read more about this File System driver, especially how/why it started exposing the WriteCache disk?

Anonymous

April 15, 2020

It’s on docs.citrix.com. I don’t remember exactly where, but there’s really not much to read about it. They cloned the PVS feature and it’s a drive letter in the OS now. Not really much more to say about it, honestly. Seeing the disk is completely normal, though.

Anonymous

January 6, 2021

Using 1912 LTSR with MCSIO storage optimizations:

Under the performance counter, we do not see “Citrix MCS storage driver” but we see only “Citrix MCS”.

ollivetti@gmx.at

February 10, 2021

Hello, same here. After upgrading to 1912 CU2, I don’t have my perfmon numbers for:

- MCS Cache Memory Used
- Cache Disk Used

How can I monitor the size now? I noticed the new disk is shown.

Regards

René

May 3, 2021

Hello…

Maybe an interesting question: if you have an all-flash system, how important or useful are the disk cache and RAM cache? I think in this scenario it doesn’t matter, or does it?

Blake

November 3, 2021

I would be curious about this as well if anyone has any insight.

Markus

April 5, 2022

Hi…

Is it possible to clear the cache disk?

On my installation, the 20GB cache disk runs full and then the machine stops working.

I could create a new 30GB cache disk, but it would run full in the future too…

thanks in advance,

markus

Carl Wallace

July 24, 2023

Is it possible to reconfigure the VMs in a Machine Catalog to use Disk Cache, if they weren’t originally set up that way?